We are thrilled to share the outcomes of the first Think_Lab at the University of Graz, titled “AI in the Hot Seat: Exploring (un)Trust, Transparency, and Inclusivity.” The event was a collaboration between the Arqus European University Alliance and the Interdisciplinary Digital Lab of the University of Graz (IDea_Lab), and it brought together more than 20 participants from diverse academic disciplines. The workshop aimed to explore the complex field of AI, address challenges surrounding trustworthiness, transparency, and inclusivity, and generate innovative ideas and research questions.

The Think_Lab workshop consisted of two sessions, each focusing on a distinct aspect of the overarching theme. The first session, “Dystopian Future: When AI Goes Wrong,” fostered in-depth discussions about responsible AI development, ethical considerations, and the critical need for human oversight and control. Participants imagined alternative worlds in which AI systems fail and examined the societal complexities and potential consequences of such failures. These discussions yielded thought-provoking insights and strategies for avoiding or mitigating dystopian scenarios.

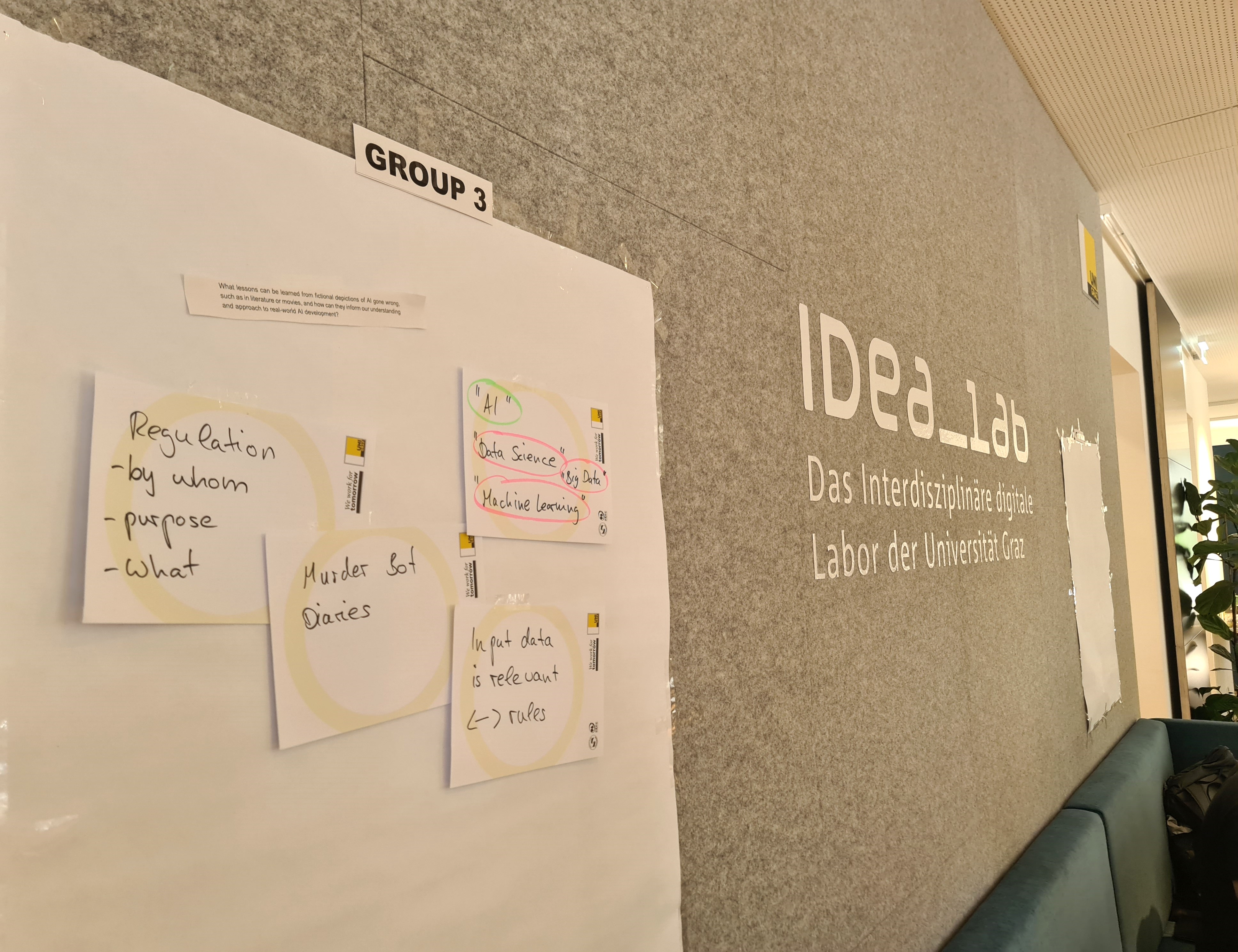

In the second session, small groups held focused discussions on specific topics related to the trustworthiness and transparency of AI. These topics included “Trust in AI Systems,” “Bias and Fairness in AI,” “Ethical Implications of AI,” “Human-AI Collaboration,” and “Socioeconomic Impact of AI.” Each group critically analyzed the challenges, identified key factors, and proposed strategies to address them. The goal was to foster inclusivity, fairness, and accountability in the development and deployment of AI systems while ensuring that their socioeconomic impact is positive.

Throughout the Think_Lab workshop, participants drew on their interdisciplinary expertise and contributed valuable insights to the field of AI research. The event served as a platform for exploring new ideas, generating research questions, and establishing collaborations among researchers. Key outcomes included:

1. Identification of challenges and opportunities associated with untrustworthy AI systems

2. Strategies for assessing and addressing bias and other sources of untrustworthiness in AI systems

3. Recommendations for promoting the use of trustworthy AI systems in diverse domains

4. Exploration of ethical considerations and frameworks for responsible AI development and deployment

5. Insights into effective collaboration and cooperation between humans and AI systems, highlighting the potential benefits and challenges

6. Examination of the socioeconomic implications of widespread AI adoption and strategies to address associated concerns

The Think_Lab workshop on “AI in the Hot Seat: Exploring (un)Trust, Transparency, and Inclusivity” demonstrated the academic community’s commitment to addressing the complex challenges surrounding AI systems. By fostering interdisciplinary collaboration and thought-provoking discussion, the workshop aimed to contribute to research on AI systems. We extend our gratitude to all participants for their valuable contributions and look forward to further exploring the exciting landscape of AI research.